Bots – The Good, The Bad and The Even Worse

Bots represent over 60 percent of all website traffic. This means that the majority of your website traffic could be coming from Internet bots, rather than humans. A bot is a software application that runs automated tasks over the Internet. Bots can be put into two categories, “good” and “bad.” Good bots visit websites to perform jobs like search engine crawling, website health monitoring and website vulnerability scanning. Bad bots perform malicious tasks such as DDoS attacks, website scraping and comment spam.

Exploring the Difference Between Good and Bad Bots

Good Bots

Good bots exist to index and monitor the web. For example, “Googlebot” is Google’s web crawling bot, often referred to as a “spider.” Googlebot crawls the Internet to discover new and updated pages to add to the Google index. It uses algorithms to determine which sites to crawl, how often to crawl them and how many pages to retrieve from each site. This crawling is what allows search engines to reward our SEO efforts and penalize those who use black hat SEO techniques.

Bad Bots

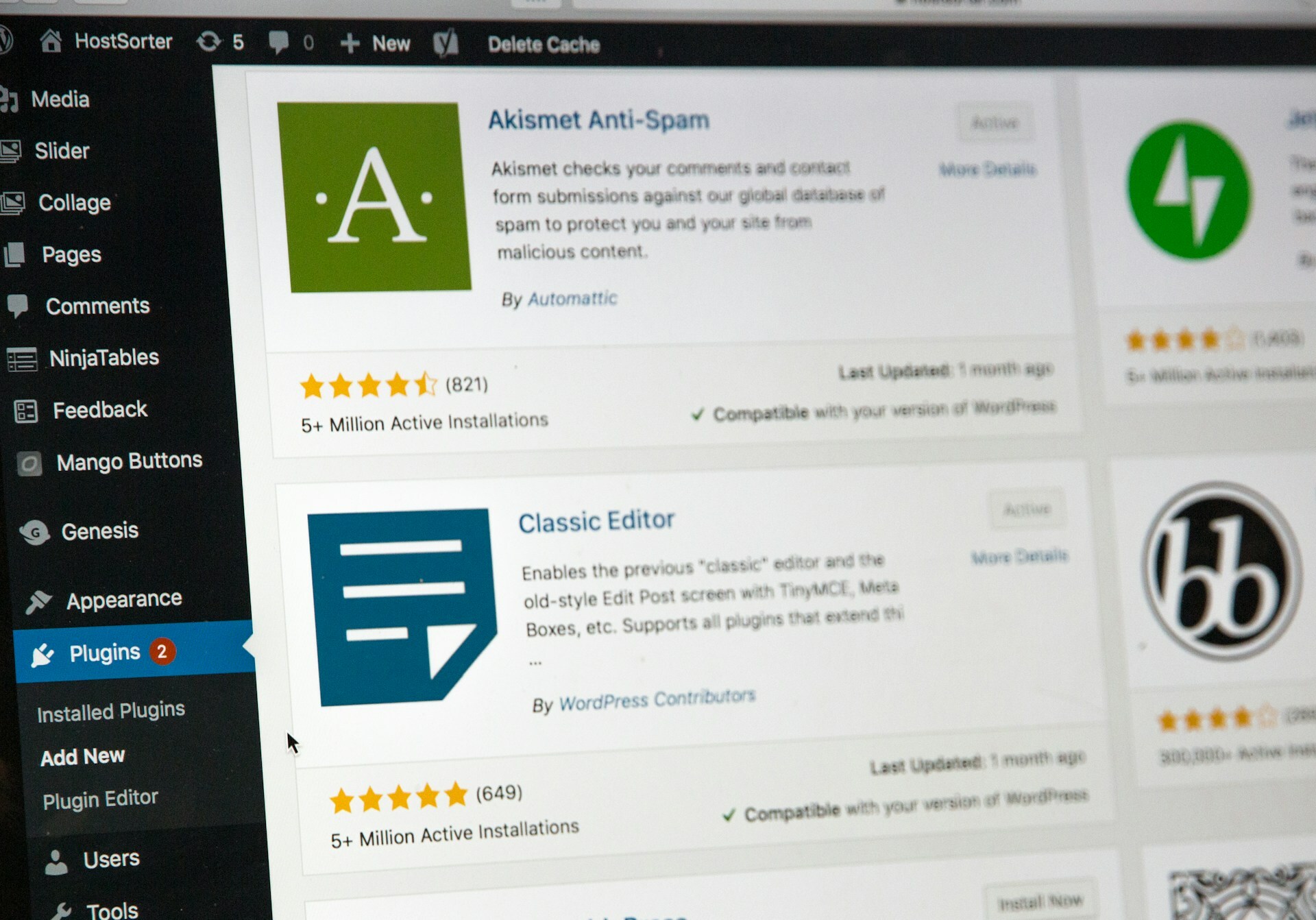

Bad bots represent over 35 percent of all bot traffic. Hackers deploy bad bots to automate simple, repetitive tasks. These bots scan millions of websites, aiming to steal website content, consume bandwidth and find outdated software and plugins that they can use as a way into your website and database.

Website Scrapers

Scrapers are bad bots that “scrape” original content from reputable sites and publish it to another site without permission.

What’s the harm?

Scrapers grab your RSS feed so they know when you publish content, allowing them to copy and republish it as soon as it’s posted. Search engines might view the scraped content as duplicate content, which can hurt SEO rankings. Unfortunately, search engines don’t care whether the duplicate content was your doing or not. Either way, you can be penalized.
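To make “duplicate content” more concrete, here is a minimal sketch of one common way to measure how similar two pieces of text are: break each into overlapping word groups (“shingles”) and compare the sets. This is an illustration only, not how any particular search engine works; the function names and the three-word shingle size are assumptions chosen for the example.

```python
import re


def shingles(text, k=3):
    """Return the set of overlapping k-word groups found in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return set()
    if len(words) < k:
        # Text shorter than one shingle: treat the whole text as one group.
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(text_a, text_b, k=3):
    """Score from 0.0 (nothing shared) to 1.0 (identical shingle sets)."""
    set_a, set_b = shingles(text_a, k), shingles(text_b, k)
    if not set_a and not set_b:
        return 1.0  # two empty texts are trivially identical
    return len(set_a & set_b) / len(set_a | set_b)


if __name__ == "__main__":
    original = "Good bots crawl the web to index new pages for search."
    scraped = "Good bots crawl the web to index new pages for search."
    print(jaccard_similarity(original, scraped))
```

A score close to 1.0 suggests one page was copied from the other. Note that the score says nothing about which copy came first, which is why the original publisher can be penalized too.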

Website Spammers

If you spend time reading blogs, you’ve probably spent some time perusing the comment section. Comment spam bots are bad bots that post spam in blog comments promoting items like shoes, cosmetics and Viagra.

What’s the harm?

Every day millions of useless spam pages are created. Comment spam bots link to items they’re promoting in hopes that the reader will click on the link, redirecting them to a spam website. Once the user is on the spam site, hackers attempt to gather information (such as credit card data) for later use or to sell for a profit.
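Because comment spam tends to rely on links and a predictable set of product terms, even a simple filter can catch a lot of it. The sketch below is a hypothetical heuristic, not a description of any real spam filter: the keyword list, the link limit and the threshold are all assumptions made up for illustration.

```python
import re

# Matches http:// and https:// links inside a comment.
URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)

# Hypothetical keyword list; a real filter would use a much larger, maintained one.
SPAM_KEYWORDS = ("viagra", "cheap shoes", "discount cosmetics")


def spam_score(comment, max_links=2):
    """Return a rough score; higher means the comment looks more like spam."""
    text = comment.lower()
    score = 0
    # Comments stuffed with links are a classic spam signal.
    if len(URL_PATTERN.findall(text)) > max_links:
        score += 2
    # Add one point for each known spam phrase that appears.
    score += sum(1 for keyword in SPAM_KEYWORDS if keyword in text)
    return score


def looks_like_spam(comment, threshold=2):
    """Flag the comment for moderation when its score reaches the threshold."""
    return spam_score(comment) >= threshold


if __name__ == "__main__":
    print(looks_like_spam("Great post, thanks for sharing!"))
    print(looks_like_spam(
        "Buy viagra http://a.example http://b.example http://c.example"
    ))
```

Heuristics like this produce false positives, so flagged comments are usually better held for moderation than deleted outright.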

Botnets And DDoS Attacks

DDoS, short for Distributed Denial of Service, is an attack that attempts to make a website unavailable by overwhelming it with traffic from multiple sources. DDoS attacks are often performed by botnets. A botnet (the combination of robot and network) is a network of private computers that have been infected with malware and are controlled as a group, usually without their owners’ knowledge.

What’s the harm?

Depending on the type of attack and how quickly you respond to it, a successful DDoS attack can take down a site for hours or days at a time. On average, a DDoS attack can cost a company anywhere from $50,000 to more than $400,000.

Blocking Bad Bots With Website Security

A web application firewall (WAF) can differentiate human traffic from bot traffic. A WAF will evaluate traffic based on its origin, behavior and the information it’s requesting. If it thinks the traffic is human traffic or “good” bot traffic, it will let it through. If the WAF suspects that the traffic attempting to enter your site comes from spam bots, scrapers or botnets, access will be denied.
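As an example of what evaluating “behavior” can look like, here is a minimal sketch of a sliding-window rate limiter that refuses a visitor who sends too many requests in a short period. It is an assumption-laden illustration, not SiteLock’s implementation: the class name, limits and IP addresses are hypothetical, and a real WAF combines many more signals than request rate.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps each client IP to the timestamps of its recently allowed requests.
        self._hits = defaultdict(deque)

    def allow(self, client_ip, now):
        """Return True if the request at time `now` (in seconds) may pass."""
        hits = self._hits[client_ip]
        # Forget requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # too many recent requests: deny
        hits.append(now)
        return True


if __name__ == "__main__":
    # Hypothetical policy: 3 requests per 10 seconds per IP address.
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
    for second in (0.0, 1.0, 2.0, 3.0):
        print(second, limiter.allow("203.0.113.5", second))
```

Per-IP limits like this slow down a single aggressive scraper, but a botnet spreads its requests across many addresses, which is one reason DDoS protection needs more than rate limiting alone.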

Website scanners help scan your website for spam, malware and vulnerabilities. SiteLock scanners are designed to identify website spam and will scan a website’s IP and domain against spam databases to check if it’s listed as a spammer. If the IP is found, SiteLock will alert the website owner immediately.
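Many public spam databases are published as DNS-based blocklists (DNSBLs), which are queried by reversing the octets of an IP address and looking the result up under the blocklist’s domain. The sketch below shows that general convention; it is not a description of how SiteLock’s scanner works, and the zone name `dnsbl.example.org` is a placeholder rather than a real blocklist.

```python
import ipaddress
import socket


def dnsbl_query_name(ip, zone):
    """Build the DNS name used to look up an IPv4 address in a blocklist zone."""
    address = ipaddress.IPv4Address(ip)  # raises ValueError for invalid input
    reversed_octets = ".".join(reversed(str(address).split(".")))
    return f"{reversed_octets}.{zone}"


def is_listed(ip, zone):
    """Return True if the blocklist zone has a DNS record for this address.

    By DNSBL convention, a name that resolves means "listed" and a name
    that does not resolve means "not listed". Requires network access.
    """
    try:
        socket.gethostbyname(dnsbl_query_name(ip, zone))
        return True
    except socket.gaierror:
        return False


if __name__ == "__main__":
    # 192.0.2.99 is a documentation-only address; the zone is a placeholder.
    print(dnsbl_query_name("192.0.2.99", "dnsbl.example.org"))
```

Each blocklist operator sets its own usage terms and query limits, so check them before running automated lookups against a real zone.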

Give SiteLock a call at 855.378.6200 to learn more about how using a web application firewall can protect your website from bots. While you’re at it, don’t forget to ask about how our website scanner can help identify vulnerabilities and malware on your website.